For the true technologist there is a clear progression from Relational Databases to Object Databases (OO or ORM) to Graph Databases (including Linked Data Triple/Quad Stores). It is possible to “automatically devolve” (for want of a better phrase) newer data structures into the old data structures… but that’s not what I am trying to get to today.

I’m coming across many technologists who are forming cliques, and their language is becoming restricted to their cliques. This is worrying, because it forms islands which don’t trade (to use business terminology). It also restricts access for the average person in the street: the technologies and tools that these islands create can become more and more distant from their potential users.

The idea of “Whirling Databases” is not to see “Databases” in terms of a specific data structure or data management system, but to see databases as a generic repository for information, capable of inputting and outputting data in different formats and frameworks. In a Linked Data system, data needs to “whirl” around the web using “links” as their travelling routes. We should work together, collaboratively and collectively to achieve this.

As some of you know, I’ve recently been working quite closely with OpenLink Software to help them help others learn about Linked Data. Linked Data, as a generic term, is an incredibly powerful tool, and a tool that should never get bogged down in frameworks (such as RDF) or formats (such as RDF/XML). It should be applicable to all frameworks and formats capable of providing outbound links and receiving inbound links. I’ve been working with Virtuoso Universal Server solidly for over a year now (not just with OpenLink Software, but with other businesses too), and I truly believe that it allows for this travelling via “links” in Linked Data for a variety of frameworks and formats. This is powerful stuff!

Note: This guide is part two of the previous blog post on Importing Linked Data into a Spreadsheet.

Introduction and Theory:

Say you don’t want your data in Google Spreadsheet, but would prefer it in Excel, OpenOffice, LibreOffice or some kind of standalone desktop application on your computer. There is still potential to work with dynamic Linked Data - via the powers of WebDAV (which is a technology allowing the establishment of an “online hard drive” over the protocols that power the world-wide-web).

A WebDAV URL is also a Data Source Name (aka an “address”). Because it is a URL it can be linked to, so it is still Linked Data, and yet it can also be treated as a store. This is one of the many powers of the Linked Data Web.

Once Method Part One is done there are two options for the rest of the tutorial: Method Part Two A deals with the data in LibreOffice (and I presume that the process is very similar in contemporary versions of OpenOffice), and Method Part Two B deals with the data in Excel (I’ve used version 2010 on Windows 7).

Prerequisites:

- You will need a copy of Virtuoso on your machine up and running (the enterprise edition and the open source edition should both work). You must also have administrative access to it.

- A new-ish version of LibreOffice, OpenOffice or Microsoft Excel

- An operating system that can cope with WebDAV (which seems to be most of them these days - to varying degrees of success)

An Example Query:

This method is ideal for fast-paced data that changes often, such as statistics or locations of crime. However, for now I’ve just used a simple lat-long search of those “areas” that touch my local area of “Long Ashton and Wraxall”.

SELECT DISTINCT ?TouchesAreaURI, ?TouchesName, ?TouchesAreaLat, ?TouchesAreaLong
WHERE {
  <https://data.ordnancesurvey.co.uk/id/7000000000000770> <https://data.ordnancesurvey.co.uk/ontology/spatialrelations/touches> ?TouchesAreaURI .
  GRAPH ?TouchesAreaURI {
    ?TouchesAreaURI <https://www.w3.org/2000/01/rdf-schema#label> ?TouchesName;
      <https://www.w3.org/2003/01/geo/wgs84_pos#lat> ?TouchesAreaLat;
      <https://www.w3.org/2003/01/geo/wgs84_pos#long> ?TouchesAreaLong
  }
}

Method Part One: Generic

As mentioned in the prerequisites - you will need administrative access to Virtuoso in order to fully run through this tutorial. This is because we need to create folders which need to be attached to the SPARQL user in order for the /sparql endpoint to save to WebDAV.

1. Administrative Setup:

   a. Login to Conductor

   b. Go to System Admin > User Accounts

   c. Click “Edit” next to the SPARQL user

   d. Change the following:

      - DAV Home Path: /DAV/home/QL/ (you could call the “QL” folder whatever you like - just remember what you’ve changed it to)

      - DAV Home Path “create”: Checked

      - Default Permissions: all checked

      - User Type: SQL/ODBC and WebDAV

   e. Click Save.

   f. Go to Web Application Server > Content Management > Repository

   g. Navigate the WebDAV to: DAV/home/QL (or whatever you named “QL”)

   h. Click the New Folder Icon (it looks like a folder with an orange splodge on the top-left)

   i. Make a new folder:

      - Name: saved-sparql-results (the name must be exactly this!)

      - Permissions: all checked

      - Folder Type: Dynamic Resources

   j. Click Create

2. Query and Data Setup:

   a. Go to https://<server>:<port-usually-8890>/sparql

   b. Enter the SPARQL query (e.g. the Example Query above)

   c. Change the following:

      - Change to a “grab everything” type of query - i.e. “Try to download all referenced resources (this may be very slow and inefficient)”. Or pick one of the other options, depending on the locations, the data and the query.

      - Format Results as: Spreadsheet (or CSV)

      - Change “Display the result and do not save” to “Save the result to the DAV and refresh it periodically with the specified name:”

      - Add a filename (with file extension). For example testspreadsheet.xls (or testspreadsheet.csv)

   d. Click “Run Query”

   e. You’ll now see a “Done” screen with the URI of the result; this is a WebDAV accessible URL. Please take note of the URL of the “saved-sparql-results” folder, it should look a little like this: https://<server>:<port-usually-8890>/DAV/home/QL/saved-sparql-results
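To make the idea of “a WebDAV URL as an address for the data” a little more concrete, here is a minimal Python sketch. It builds the URL of a saved result inside the saved-sparql-results folder and parses CSV text of the kind the endpoint saves. The server, port, folder and filename values are placeholders, the function names are made up for illustration, and fetch_saved_result assumes that the DAV folder accepts HTTP Basic authentication, which may not match how your Virtuoso instance is configured.

```python
import csv
import io
import urllib.request


def saved_result_url(server, port, home_folder, filename, scheme="https"):
    """Build the URL of a file inside the saved-sparql-results DAV folder."""
    return (f"{scheme}://{server}:{port}/DAV/home/{home_folder}"
            f"/saved-sparql-results/{filename}")


def parse_csv_text(text):
    """Turn CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def fetch_saved_result(url, username, password):
    """Download a saved result (assumption: HTTP Basic auth on the DAV folder)."""
    manager = urllib.request.HTTPPasswordMgrWithDefaultRealm()
    manager.add_password(None, url, username, password)
    handler = urllib.request.HTTPBasicAuthHandler(manager)
    opener = urllib.request.build_opener(handler)
    with opener.open(url) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Placeholder values; replace them with your own server details.
    print(saved_result_url("localhost", 8890, "QL", "testspreadsheet.csv",
                           scheme="http"))
```

Because the saved file is refreshed by the server, anything that re-reads this URL (a script like the one above, or a spreadsheet) picks up the new data on its next request.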

Method Part Two A: For LibreOffice and OpenOffice users

You will need to do the following in order to connect to a WebDAV folder:

- Go to Tools > Options > LibreOffice/OpenOffice > General and ensure that “Use LibreOffice/OpenOffice dialogue boxes” is turned on.

You will be linking dynamically from your spreadsheet to the resource on your WebDAV instance:

- Start a new spreadsheet, or load up a spreadsheet where you want the resource to go.

- Go to Insert > Link to External Data

- Click the “…” button

- Enter your “saved-sparql-results” URL (not including the filename itself!), and press enter

- You should now see your “saved-sparql-results” WebDAV directory. Select the file, and click insert. The program will then probably ask you for your DAV login details (you may want to make the program remember the details). It may also ask you about the format of the file; just follow that through as you normally would when importing/opening a file. You may also have to select “HTML_all” if you chose the “Spreadsheet” option in the SPARQL interface.

- Check the “Update every” box, and change the time to a suitable time based on the data.

- Finally, press the “OK” button… and you’ll see your lovely Linked Data inside your spreadsheet. Then you’ll be able to do whatever you want to your data (e.g. create a graph, do some calculations etc etc) - and everything will update when the data is updated. Funky!

Method Part Two B: For Excel users

OK, so I’m not a native Windows user (I used Mac OS and Amiga OS in my childhood, before moving to Unix and Linux based operating systems in about 2001). What I have found is that Windows 7 and Excel go a little strange with WebDAV, they like certain configurations - so I’ll be showing you a reasonably bodgy way of doing this :-P

- Prerequisite: In step 2.c of Method Part One (where you choose the results format) - save the results as HTML, and make sure the file extension is also .html

- Open Excel (I’m using Excel 2010)

- Click on the Data menu

- Click “From Web”

- In the address bar enter your “saved-sparql-results” URL and press enter - this will probably ask you to enter your DAV username and password

- Click on your <filename>.html file - you should then see the HTML Table

- Press the Import button

- A dialogue will pop up asking about where you would like to place your data - for ease I use the default.

- You’ll see the data! The important thing to note is that this is Linked Data - however, it is not quite self-updating yet. In order to do that we need to set the connection properties… so…

- Select the imported data

- Click “properties” which is in the “Connections” subpanel of the “Data” menu

- Change the “Refresh Every”, and/or check the “Refresh data when opening the file”. Click ok.

- Self-updating Excel spreadsheets from Linked Data. Funky!

Documentation Resources

- Importing Linked Data into a Spreadsheet Part 1 (Google Spreadsheets)

- LibreOffice: “Opening a Document Using WebDAV over HTTPS”

- OpenLink Software Documentation of the SPARQL implementation in Virtuoso and its endpoint

Software Resources

- OpenLink Virtuoso

- Virtuoso Universal Server - Proprietary Edition

- Virtuoso Open Source Edition

- LibreOffice

- OpenOffice

- Microsoft Office

I hope that all works for you, and feel free to share any ideas or findings!

What?

This article goes through the details of verifying a WebID certificate using REST built in a PHP client. It will connect to an OpenLink Virtuoso service for WebID verification.

WebID is a new technology for carrying your identity with you. Essentially you store your identity in the form of a certificate in your browser, and this certificate can be verified against a WebID service. WebID is a combination of technologies (notably FOAF (Friend of a Friend) and SSL (Secure Socket Layer)). If you haven’t got yourself a WebID yet, then you can pick one up at any ODS installation (for instance: https://id.myopenlink.net/ods ) and you can find them under the Security tab when editing your profile. To learn more about the WebID standard please visit: https://www.w3.org/wiki/WebID For more information about generating a WebID through ODS please see: https://www.openlinksw.com/wiki/main/ODS/ODSX509GenerateWindows

REST (or Representational State Transfer) is a technique for dealing with a resource at a location. The technologies used are usually HTTP (HyperText Transfer Protocol), the resource is usually in some standardised format (such as XML or JSON) and the location is specified by a URL (Uniform Resource Locator). These are pretty standardised and contemporary tools and techniques that are used on the World Wide Web.

PHP is a programming/scripting language usually used for server-side development. It is a very flexible language due to its dynamic, weak typing and its capability of doing both object-oriented and procedural programming. Its server-side usage is often “served” using hosting software such as Apache HTTP Server or OpenLink Virtuoso Universal Server. To learn more about PHP visit: https://www.php.net/

Virtuoso is a “Universal Server” - it contains within it, amongst other things, a database server, a web hosting server and a semantic data triple store. It is capable of working with all of the technologies above - REST, PHP and WebID - along with other related technologies (e.g. hosting other server-side languages, dealing with SQL and SPARQL, providing WebDAV etc etc). It comes in two forms: an enterprise edition and an open source edition, and is installable anywhere (including cloud-based servers such as Amazon EC2). To learn more about Virtuoso please visit: https://virtuoso.openlinksw.com/

ODS (OpenLink Data Spaces) is a linked data web application for hosting and manipulating personal, social and business data. It holds within it packages for profiling, webdav file storage, feed reading, address book storage, calendar, bookmarking, photo gallery and many other functions that you would expect from a social website. ODS is built on top of Virtuoso. To learn more about ODS please visit: https://ods.openlinksw.com/wiki/ODS/

Why?

Identity is an important issue for trust on the web, and it comes from two perspectives:

- When a user accesses a website they want to know that their identity remains theirs, and that they can log in easily without duplicating effort.

- When a developer builds a web application they want to know that the users accessing their site are who they say they are.

WebID handles this through interlinking using URIs over HTTP, profiling using the FOAF standard, and security using the SSL standard. From a development point of view it is necessary to verify a user, and this is the reason for writing this article.

How?

To make things a lot easier OpenLink Software have created a service built into their ODS Framework which verifies a certificate provider with an issued certificate. The URL for the web service is: https://id.myopenlink.net/ods/webid_verify.vsp

This webservice takes the following HTTP Get Parameter:

| callback | string |

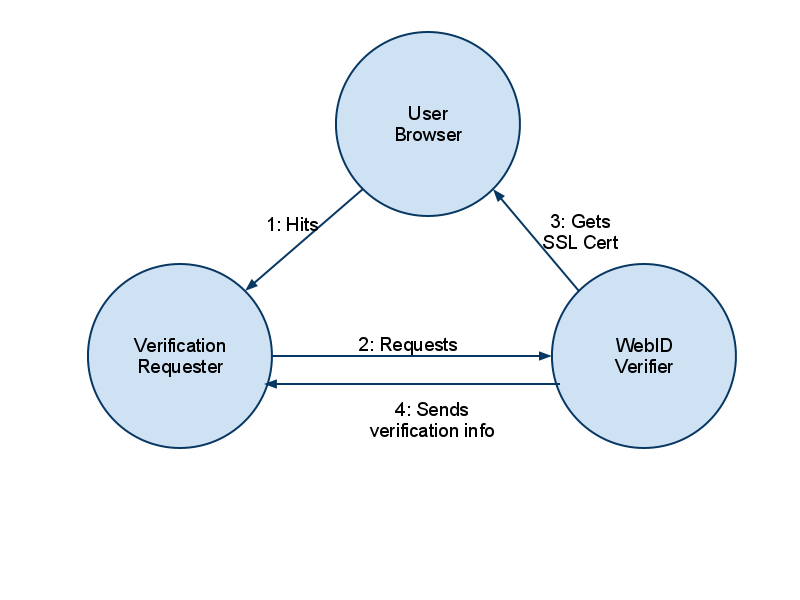

The callback is the URL that you want the success/failure information to be returned to. The cleverness actually comes from the fact that the service also tests your SSL certificate information, which is stored in the header information that the browser sends across. This makes it a three agent system (the user’s browser, the “Verification Requester” and the “Verifier”), which could be shown a bit like this:

So we can start to build up a picture of what a “Verification Requester” might look like:

- First Page: Send user to the “Verifier” with the relevant Callback URL

- Callback Page: Receive details from the verifier - details will be found in the HTTP Parameters.

- If a WebID URI is returned then you know everything is ok

- If an error is returned then the WebID has not been verified

Let’s build something then. We shall build a simple single page script which does different things based on whether it is on the first pass through or the second….

(example code based on code written by OpenLink Software Ltd)…

<?php
// Build the absolute URL of this demo page; it is used as the callback.
function apiURL()
{
  // Assumed: the URL starts with the scheme, since the next line appends the host to $pageURL.
  $pageURL = (isset ($_SERVER['HTTPS']) && $_SERVER['HTTPS'] == 'on') ? 'https://' : 'http://';
  $pageURL .= $_SERVER['SERVER_PORT'] <> '80' ? $_SERVER['SERVER_NAME'] . ':' . $_SERVER['SERVER_PORT'] : $_SERVER['SERVER_NAME'];
  return $pageURL . '/ods/webid_demo.php';
}

// "webid" and "error" come back from the verifier; "go" comes from the form.
$_webid = isset ($_REQUEST['webid']) ? $_REQUEST['webid'] : '';
$_error = isset ($_REQUEST['error']) ? $_REQUEST['error'] : '';
$_action = isset ($_REQUEST['go']) ? $_REQUEST['go'] : '';

// First pass: nothing has been returned yet, so redirect if the form was submitted.
if (($_webid == '') && ($_error == ''))
{
  if ($_action <> '')
  {
    if (empty ($_SERVER['HTTPS']) || $_SERVER['HTTPS'] <> 'on')
    {
      // Without HTTPS the browser cannot have presented a certificate.
      $_error = 'No certificate';
    }
    else
    {
      $_callback = apiURL();
      $_url = sprintf ('https://id.myopenlink.net/ods/webid_verify.vsp?callback=%s', urlencode($_callback));
      header (sprintf ('Location: %s', $_url));
      return;
    }
  }
}
?>

This first bit of code (above) simply deals with redirecting the user process to the Verifier service with the relevant (dynamic) Callback URL. You will notice that it only redirects when the “go” request is set - this is for demonstration purposes. We shall continue….

<html>
  <head>
    <title>WebID Verification Demo - PHP</title>
  </head>
  <body>
    <h1>WebID Verification Demo</h1>
    <div>
      This will check your X.509 Certificate's WebID watermark. <br/>Also note this service supports ldap, http, mailto, acct scheme based WebIDs.
    </div>
    <br/>
    <br/>
    <div>
      <form method="get">
        <input type="submit" name="go" value="Check"/>
      </form>
    </div>
    <?php
      // Second pass: show whatever the verifier sent back (escaped before printing).
      if (($_webid <> '') || ($_error <> ''))
      {
    ?>
    <div>
      The return values are:
      <ul>
        <?php
          if ($_webid <> '')
          {
        ?>
        <li>WebID - <?php print (htmlspecialchars ($_webid)); ?></li>
        <li>Timestamp in ISO 8601 format - <?php print (htmlspecialchars (isset ($_REQUEST['ts']) ? $_REQUEST['ts'] : '')); ?></li>
        <?php
          }
          if ($_error <> '')
          {
        ?>
        <li>Error - <?php print (htmlspecialchars ($_error)); ?></li>
        <?php
          }
        ?>
      </ul>
    </div>
    <?php
      }
    ?>
  </body>
</html>

This second part of the code is twofold:

- Firstly, it displays a simple form with a “go” button - this is simply to demonstrate the “redirection” part of the code

- Secondly, this is where we print out the results from what we’ve callback’d. You’ll see that we try to print out the WebID URI, the Timestamp and any Error message.

What is great about the above code is that it can be run on any server that has PHP installed. It doesn’t need to be installed specifically on Apache HTTP Server, nor on OpenLink Virtuoso - it could be installed on any HTTP server with PHP hosting. It could even be adapted to Ruby, Python, Perl, ASP or any other server-side language, scripting language (including Javascript), or standalone programming language.
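As a rough idea of what such an adaptation might look like, here is a minimal Python sketch of the two halves of the “Verification Requester”: building the redirect URL, and reading the values that come back on the callback. The verifier URL and the callback, webid, ts and error parameter names are taken from the PHP example above; the function names and the example.org addresses are made up for illustration, and wiring this into a real web framework is left out.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# The verification service used in the PHP example above.
VERIFIER_URL = "https://id.myopenlink.net/ods/webid_verify.vsp"


def build_verify_url(callback_url):
    """Return the verifier URL that the user's browser should be sent to."""
    return VERIFIER_URL + "?" + urlencode({"callback": callback_url})


def read_callback(callback_request_url):
    """Extract webid, ts and error from the URL the verifier redirects back to."""
    params = parse_qs(urlsplit(callback_request_url).query)

    def first(name):
        # parse_qs returns a list per parameter; take the first value or ''.
        return params.get(name, [""])[0]

    webid = first("webid")
    error = first("error")
    return {
        "verified": webid != "" and error == "",
        "webid": webid,
        "ts": first("ts"),
        "error": error,
    }


if __name__ == "__main__":
    # Hypothetical callback address for this sketch.
    print(build_verify_url("https://example.org/webid_demo"))
```

The first function covers the “First Page” step and the second covers the “Callback Page” step; as in the PHP version, the page would need to be served over HTTPS for the browser to present a certificate.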

The thing is, this not only works with http: WebIDs, it can work with ldap:, mailto: or acct: WebIDs too! Kingsley Idehen demonstrates this to us in his twitpic.

Grab the full code here

I’ve recently come into contact with the ImportXML() function (and the related ImportHTML() and ImportFeed() functions) found in the Google Docs Spreadsheet app [1], and their usefulness is to do with Uniform Resource Locators (URLs). This was partly thanks to Kingsley Idehen’s example Google Spreadsheet on the “Patriots Players” (and his tweet dated 22nd June at 10:11am), and so I wanted to see how it was done and maybe make something that wasn’t about American Football.

URLs are the actual addresses for Data, they are the “Data Source/Location Names” (DSNs). With the ImportHtml() (et al) functions you just have to plug in a URL and your data is displayed in the spreadsheet. Of course this is dependent on the structure of the data at that address, but you get the picture.

Let me show you an example based on a SPARQL query I created many moons ago:

SELECT DISTINCT ?NewspaperURI ?Newspaper ?Stance WHERE {

?NewspaperURI rdf:type dbpedia-owl:Newspaper ;

rdfs:label ?Newspaper ;

dcterms:subject <https://dbpedia.org/resource/Category:Newspapers_published_in_the_United_Kingdom>;

<https://dbpedia.org/property/political> ?Stance .

FILTER (lang(?Stance) = "en") .

FILTER (lang(?Newspaper) = "en")

}

ORDER BY ?Stance

Briefly, the above shows a newspaper URI, a newspaper name and the newspaper’s political stance, limited to just those newspapers published in the United Kingdom. The result is a little messy, as not all of the newspapers have the same style of label, and some of the newspapers’ stances will be hidden behind a further set of URIs - but this is just an example.

Now we can plug this in to a sparql endpoint such as:

- https://dbpedia.org/sparql or

- https://lod.openlinksw.com/sparql

We shall use the 2nd for now - if you hit that in your browser then you’ll be able to plug the query into a nicely made HTML form and fire that off to generate an HTML webpage. [2]

However, that isn’t entirely useful, as we want to get it into a Google Spreadsheet! So, we need to modify the URL that the form creates. Firstly, copy the URL as it is… for instance…

https://lod.openlinksw.com/sparql?default-graph-uri=&should-sponge=&query=SELECT+DISTINCT+%3FNewspaperURI+%3FNewspaper+%3FStance+WHERE+{%0D%0A%3FNewspaperURI+rdf%3Atype+dbpedia-owl%3ANewspaper+%3B%0D%0A++rdfs%3Alabel+%3FNewspaper+%3B%0D%0A++dcterms%3Asubject+%3Chttp%3A%2F%2Fdbpedia.org%2Fresource%2FCategory%3ANewspapers_published_in_the_United_Kingdom%3E%3B%0D%0A+%3Chttp%3A%2F%2Fdbpedia.org%2Fproperty%2Fpolitical%3E+%3FStance+.+%0D%0AFILTER+%28lang%28%3FStance%29+%3D+%22en%22%29+.+%0D%0AFILTER+%28lang%28%3FNewspaper%29+%3D+%22en%22%29%0D%0A}%0D%0AORDER+BY+%3FStance&debug=on&timeout=&format=text%2Fhtml&CXML_redir_for_subjs=121&CXML_redir_for_hrefs=&save=display&fname=

Then where it says “text%2Fhtml” (which means that it is of text/html type) change it to “application%2Fvnd.ms-excel” (which means that it is of application/vnd.ms-excel type - in other words a spreadsheet-friendly table). In our example this would make the URL look like…

We then need to plug this into our Google Spreadsheet - so open a new spreadsheet, or create a new sheet, or go to where you want to place the data on that sheet.

Then click on one of the cells, and enter the following function:

=ImportHtml("https://lod.openlinksw.com/sparql?default-graph-uri=&should-sponge=&query=SELECT+DISTINCT+%3FNewspaperURI+%3FNewspaper+%3FStance+WHERE+{%0D%0A%3FNewspaperURI+rdf%3Atype+dbpedia-owl%3ANewspaper+%3B%0D%0A++rdfs%3Alabel+%3FNewspaper+%3B%0D%0A++dcterms%3Asubject+%3Chttp%3A%2F%2Fdbpedia.org%2Fresource%2FCategory%3ANewspapers_published_in_the_United_Kingdom%3E%3B%0D%0A+%3Chttp%3A%2F%2Fdbpedia.org%2Fproperty%2Fpolitical%3E+%3FStance+.+%0D%0AFILTER+%28lang%28%3FStance%29+%3D+%22en%22%29+.+%0D%0AFILTER+%28lang%28%3FNewspaper%29+%3D+%22en%22%29%0D%0A}%0D%0AORDER+BY+%3FStance&debug=on&timeout=&format=application%2Fvnd.ms-excel&CXML_redir_for_subjs=121&CXML_redir_for_hrefs=&save=display&fname=", "table", 1)

The first parameter is our query URL, the second is for pulling out the table elements, and the third is to grab the first of those table elements.

The spreadsheet will populate with all the data that we asked for. Pretty neat!
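If you would rather not edit the long URL by hand, the swap of the format parameter and the construction of the cell formula can be sketched in a few lines of Python. This is only an illustration: the function names are made up, and it assumes the URL carries a format parameter in its query string, as the one generated by the form above does.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_result_format(sparql_url, mime_type="application/vnd.ms-excel"):
    """Return the same /sparql URL with its format parameter replaced."""
    parts = urlsplit(sparql_url)
    # keep_blank_values preserves empty parameters such as "fname=".
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    pairs = [(key, mime_type if key == "format" else value)
             for key, value in pairs]
    return urlunsplit(parts._replace(query=urlencode(pairs)))


def import_html_formula(url, index=1):
    """Build the ImportHtml() cell formula for the index-th table at url."""
    return f'=ImportHtml("{url}", "table", {index})'


if __name__ == "__main__":
    # A shortened stand-in for the long query URL shown above.
    html_url = ("https://lod.openlinksw.com/sparql?default-graph-uri="
                "&query=SELECT+%2A+WHERE+%7B%3Fs+%3Fp+%3Fo%7D"
                "&format=text%2Fhtml&save=display&fname=")
    print(import_html_formula(with_result_format(html_url)))
```

The printed line can be pasted straight into a spreadsheet cell.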

Now that the data is in a Google Spreadsheet you can do all kinds of things that spreadsheets are good at. One idea based on our example might be statistics of political stance. Or, if you modify the query a bit to pull out more than just the UK newspapers, you could look at the statistics of those stances by country and how they relate to the political parties currently in power.
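The “statistics of political stance” idea amounts to counting rows per stance. In a spreadsheet you would do this with the built-in functions, but as a sketch of the logic here it is in Python. The rows below are invented placeholders rather than real query results, and the only assumption is that each row has a Stance column, as in the query above.

```python
from collections import Counter


def stance_counts(rows):
    """Count how many newspapers share each stance, most common first."""
    counts = Counter(row["Stance"].strip()
                     for row in rows if row.get("Stance"))
    return counts.most_common()


if __name__ == "__main__":
    # Hypothetical rows standing in for the imported query results.
    sample_rows = [
        {"Newspaper": "Paper A", "Stance": "Centre"},
        {"Newspaper": "Paper B", "Stance": "Centre"},
        {"Newspaper": "Paper C", "Stance": "Left"},
    ]
    for stance, count in stance_counts(sample_rows):
        print(stance, count)
```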

It also doesn’t have to specifically be data found in DBPedia, it could be anything (business data, science data, personal data etc etc). The key to all of this is the power of URLs, and how they allow the dynamic linking to hyperdata! This is the killer-power of Linked Data.

If you do the above tutorial then let us know if you find anything of interest, and share any experiences you may have.

Resources

- Google Documentation of using ImportXml, ImportHtml and ImportFeed functions in Google Spreadsheets: https://docs.google.com/support/bin/answer.py?answer=75507

- OpenLink Software Documentation of the SPARQL implementation in Virtuoso and its endpoint: https://docs.openlinksw.com/virtuoso/rdfsparql.html

- Kingsley has been adding to demos on his bookmarks page: https://www.delicious.com/kidehen/linked_data_spreadsheet_demo

Back in 2005 when I was first learning about “Agent Oriented Development” I was taught that an agent must Perform in some Environment, which it manipulates using its Actuators and perceives through its Sensors (this is known as PEAS).

What reminded me of this was Kingsley Idehen’s 2006 blog post on the Dimensions of the Web, which is very much still a valid concept. What came to me though was that if Intelligent Agents are to be truly Autonomous on a Linked Data Web they will probably have to be mobile. I don’t mean mobile as in developed for mobile devices; I mean that they should probably be capable of moving themselves from one computer to another. Otherwise they will just become some kind of “clever” web service which is stored in one place and gets its data from other web services (i.e. we revert back to Dimension 2 in Kingsley’s post).

But there is a problem with this model… it sounds a bit like a virus, and something initially quite good could potentially become a bad thing with an evolutionary technique such as Genetic Programming. An intelligent agent capable of adapting to its environment through genetic programming techniques is incredibly powerful, but with great power comes great responsibility.

I have mixed feelings about Singularity philosophy, and I am particularly wary about the Singularity University, however maybe we should be thinking about the ethical/security/identity implications of Autonomous Intelligent Agents on the Linked Data Web.

Some food for thought… on this lovely Monday morning… ;-)

I could do with your opinion…

I’ve been a full Member of the British Computer Society (BCS) since 2007, and I have mentioned in the past to various people that I don’t feel like I get much benefit from it. It is probably one of those things that if you put effort into it then you’ll get benefit back, however nothing incredibly suited to me happens in the Bristol or South West regions of the BCS. One benefit that I may eventually take up is becoming “Chartered” as either an Engineer (CEng) or as an IT Practitioner (CITP), which can be done through the BCS.

The fact that the BCS seems to be targeting the IT Business niche, and trying to keep fingers in a few other pies means that my interest in the society is lacking. Therefore I am seriously considering resigning from the BCS in July, particularly as the cost of maintaining membership is also quite high when I’m trying to save some money so that I can put it into other interests (for personal, business and family interests).

If I do decide to leave the BCS, I will still be a member of the three year Journeyman Scheme with the Information Technologists’ Company (a Livery Company of the City of London), also known as WCIT. Although the WCIT is quite similar in niche to the BCS, it provides a framework of support and development for its members and is also backed up with lots of lovely history and tradition from the ancient Guilds and Liveries of London. So I shall maintain my affiliation with the WCIT, even though it does cost quite a bit.

If I did resign from the BCS, however, I would feel like I had lost my professional body (and I would not have the postnominals “MBCS” anymore). But there are some alternatives, which might be more suited to my interests, skills, style and political-views and are potentially a lot cheaper than maintaining a membership at the BCS:

- The ACCU - originally a society for C and C++ developers, but it has expanded its interests into other areas of programming and software development.

- The IEEE Computer Society - an international society with a lot more of a practical feel to it than the BCS, primarily because it is part of the Institute of Electrical and Electronics Engineers (IEEE). It has a huge amount of free stuff for its members, and is good value for the price.

- The ACM - an international society, but mainly based in the USA. It has more of an academic feel to it than the BCS. It has some nice benefits for its members, it isn’t incredibly cheap, but I think it might be cheaper than the BCS. I used to be a student member of the ACM, but decided that the BCS might be better for localised stuff when it came to full membership.

- The IAP - an interesting British society, about the same price as the BCS. It has a very practical feel to it, and some nice simple benefits for its members (particularly those who are consultants).

- The Association for Logic, Language and Information - a rather interesting European society for the bridges between Logic, Language and Information. Sounds quite me. It is free to join, but it costs to receive their journal.

So, to the reader - what should I do?

- Stay with the BCS and the WCIT, don’t join anything else

- Stay with the BCS, the WCIT and join one of the above (which?)

- Stay with the BCS, the WCIT and join something else (which?)

- Leave the BCS, stay with the WCIT, but don’t join any professional body

- Leave the BCS, stay with the WCIT and join one of the above (which one?)

- Leave the BCS, stay with the WCIT and join something else (what?)

- Leave the BCS, stay with the WCIT, but come back to the BCS in a couple of years, particularly for the CEng/CITP status

What do you think? Do you have any experience of any of the above societies, or have something else to share? Has membership of a professional body helped you to attain/maintain work? Has it benefited you in other ways? Please do share - either publicly using the comments system or privately by email ( daniel [at] vanirsystems [dot] com ).

Thank you,

Daniel

Tim Berners-Lee conceptualised the web when he was at CERN. He built a directory of the people, groups and projects - this was the ENQUIRE project. This eventually morphed into the World Wide Web, or a “Web of Documents”. Documents are indeed “objects”, but often not structurally defined. A great book about this is “Weaving the Web” by Berners-Lee.

The boom in this way of thinking came when businesses started to use XML to define “objects” within their processes. Being able to define something and pass it between one system and another was incredibly useful. For instance, I was in a development team quite a while back where we were communicating with BT using XML to describe land-line telephone accounts.

At the same time, people started developing and using a new flavour of the “web” which was a lot more sociable. We saw the rise of “profiles”, “instant messaging”, “blogging” and “microblogging/broadcasting”, all of which are very easy to understand in terms of “objects”: Person X is described as (alpha, beta, omega) and owns a Blog B which is made up of Posts [p] and a Twitter account T which is made up of Updates [u].
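To make the “objects” reading concrete, the Person / Blog / Twitter description above could be sketched as a handful of Python data classes. The class and field names here are purely illustrative and are not taken from any particular social network’s API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Post:
    title: str


@dataclass
class Update:
    text: str


@dataclass
class Blog:
    name: str
    posts: List[Post] = field(default_factory=list)  # Posts [p]


@dataclass
class TwitterAccount:
    handle: str
    updates: List[Update] = field(default_factory=list)  # Updates [u]


@dataclass
class Person:
    name: str
    attributes: Tuple[str, ...]  # e.g. (alpha, beta, omega)
    blog: Blog
    twitter: TwitterAccount


if __name__ == "__main__":
    person_x = Person(
        name="X",
        attributes=("alpha", "beta", "omega"),
        blog=Blog("B", [Post("First post")]),
        twitter=TwitterAccount("T", [Update("Hello")]),
    )
    print(person_x)
```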

The first reaction to the use of objects for web communications was to provide developers with object descriptions using XML via “Application Programming Interfaces” (API) using the HyperText Transfer Protocol (HTTP). This was the birth of “Web Services”. Using web services a business could “talk” over the web, and social networks could “grab” data.

Unfortunately this wasn’t enough, as XML does not provide a standardised way of describing things, and also does not provide a standardised way of describing things in a distributed fashion. Distributed description is incredibly useful, it allows things to stay up to date - and not only that - it is philosophically/ethically more sound as people/groups/businesses “keep” their own data objects. The Semantic Web provided the Resource Description Framework (RDF) to deal with this, and the Linked Data initiative extends this effort to its true potential by providing analogues in well-used situations, processes and tools. (For instance, see my previous blog post on how Linked Data is both format and model agnostic).

This is all good. However, we live in an age where Collaboration is essential. With Collaboration comes the ability to edit, and with the ability to edit comes the need to have systems in place that can handle the areas of “identification”, “authentication”, “authorisation” and “trust”. We are starting to see this in systems such as OpenID, OAuth and WebID - particularly when coupled with FOAF [1].

These systems will be essential for getting distributed collaboration (via Linked Data) right, and distributed collaboration should be dealt with by the major Semantic Web / Linked Data software providers - and should be what web developers are thinking about next. Distributed Collaboration is going to be incredibly useful for both business-based and social-based web applications.

Please do feel free to comment if you have any relevant comments, or if you have any links to share relevant to the topic.

Many thanks,

Daniel

Footnotes

- There is some nice information about WebID at the W3C site. Also see FOAF+SSL on the Openlink website, and the WebID protocol page on the OpenLink website.

I decided to give an overview of the best books that you can buy to make yourself well-rounded in terms of Linked Data, the Semantic Web and the Web of Data.

Knowledge Working

Let us start off with what I like to call “Knowledge Working”. This is essentially the realm of technical knowledge-management and knowledge acquisition/modelling. There are three books that I would promote in this respect.

The first is Information Systems Development: Methodologies, Techniques and Tools, written by Avison & Fitzgerald. This book, although it gets down and dirty with some technical detail, can be incredibly useful for an overview of information systems and knowledge design in general.

The second is Knowledge Engineering and Management: The CommonKADS Methodology (A Bradford book) by Guus Schreiber et al (aka the CommonKADS team). I was taught from this book on one of my modules during my undergraduate degree. It is less “programmer-based” than the Avison & Fitzgerald book, but has very useful information about acquisition, modelling and analysis of knowledge. I highly recommend this, particularly as it is incredibly useful when coupled with Semantic Web technology.

The third book is a new book that we’re waiting to have the second edition of (at the time of writing it was estimated to be available 13th July 2011). This book is Semantic Web for the Working Ontologist: Effective Modeling in RDFS and OWL by Allemang & Hendler (the first edition is already available). Allemang and Hendler are heavyweights in the Semantic Web world, with Allemang being chief scientist at TopQuadrant and Hendler being one of the writers of the earliest and most well known articles on “The Semantic Web” (Scientific American: 2001 with Berners-Lee and Lassila). This book is quite technical in places, but it does focus on ontologies and metadata (and metametadata?).

Web Science

Web Science is another important area of interest, particularly in the earlier stages of Linked Data and Semantic Web development, and when actually putting the theory into practice.

The earliest official book by the Web Science Trust team was A Framework for Web Science (Foundations and Trends in Web Science) by Berners-Lee et al. This is quite an expensive book, and quite academic in style, but useful nonetheless.

You may want to look at something a little cheaper, and a little more practical too. This is where The Web’s Awake: An Introduction to the Field of Web Science and the Concept of Web Life by Tetlow comes in. This interesting book takes a common sense approach to Web Science, and I would certainly recommend it.

Tools and Techniques: The Evolution from the Semantic Web to Linked Data

There are the classics such as Practical RDF by Powers and A Semantic Web Primer by Antoniou and van Harmelen. There are introductory books such as Semantic Web For Dummies by Pollock. These are all good foundational books which are recommended, but they often don’t get to the essence of the Semantic Web and especially not Linked Data.

For the essential Semantic Web and Linked Data we may want to look at Programming the Semantic Web by Segaran et al (Segaran also authored the wonderful Programming Collective Intelligence: Building Smart Web 2.0 Applications), and Semantic Web Programming by Hebeler (of BBN Technologies) et al. Plus there are the quite useful, although very specific, books Linking Enterprise Data and Linked Data: Evolving the Web into a Global Data Space (Synthesis Lectures on the Semantic Web, Theory and Technology), the latter of which is also available free at “Linked Data by Heath and Bizer” ( https://linkeddatabook.com/ ).

Other topics of interest

There are of course other areas of interest which are very relevant to true Linked Data and the Semantic Web. These include:

- Semantic Networks and Frames - from the fields of logic and artificial intelligence. This also inspired Object-Oriented theory.

- Pointers and References - yep the ones from programming (such as in C++).

- HyperText Transfer Protocol

- Graph Theory

I hope this list of interesting and useful books is handy, please do comment if you have any other books that you wish to share with us.

Thank you,

Daniel

Many moons ago I wrote a blog post detailing how RDF (one of the semantic web modelling frameworks) is format agnostic, in other words you can write RDF in various languages. These languages, or formats, may include XML (as “RDF/XML”), Turtle (as “RDF/TTL”), Notation 3 (as “RDF/N3”), RDFa (as “XHTML-RDFa”) and N-Triples (as “RDF/NT”) [1].

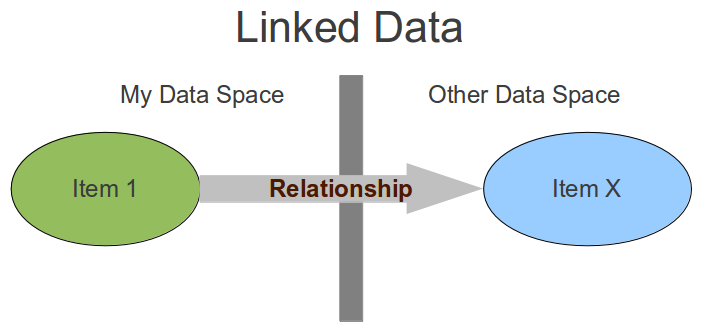
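To make the format-agnostic point a little more concrete, here is a minimal sketch in Python. It takes one statement (a subject, a predicate and a literal value) and writes it out in two of the formats mentioned above, N-Triples and Turtle. The person URI, the function names and the hand-rolled string formatting are my own assumptions for illustration only; a real application would normally use an RDF library, and this sketch assumes the literal contains no quotes or backslashes that would need escaping.

```python
# Hypothetical example data: the subject URI is made up for illustration.
FOAF = "http://xmlns.com/foaf/0.1/"
TRIPLE = ("http://example.org/people/daniel#me", FOAF + "name", "Daniel")


def to_ntriples(s, p, o):
    """N-Triples: one statement per line, every URI written out in full."""
    return f'<{s}> <{p}> "{o}" .'


def to_turtle(s, p, o, prefix="foaf", namespace=FOAF):
    """Turtle: the same statement, with the predicate shortened by a prefix."""
    if p.startswith(namespace):
        predicate = f"{prefix}:{p[len(namespace):]}"
    else:
        # Predicate is outside the declared namespace, so keep the full URI.
        predicate = f"<{p}>"
    return f'@prefix {prefix}: <{namespace}> .\n\n<{s}> {predicate} "{o}" .'


if __name__ == "__main__":
    print(to_ntriples(*TRIPLE))
    print()
    print(to_turtle(*TRIPLE))
```

Both outputs describe exactly the same statement; only the syntax on the page differs, which is the sense in which the model is independent of the format.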

“Linked Data” (the modern Data Web concept) partly started life as an extension to the Semantic Web, basically adding meaning to data in one space by using outbound (and/or inbound) links to data in another space. But the important thing to grasp is that Linked Data is not format dependent, nor is it model dependent as some may believe! [2]

RDF does not have to be used to achieve “Linked Data”. RDF can obviously help in some cases, but in some cases when the data is either very simple or very domain-centric we don’t want to spend too much time trying to make our data fit into (or out of!) the RDF model. Linked Data could very easily be in some other format, such as CSV, JSON, RSS, Atom or even the Microsoft Collection XML format. Whatever data we are dealing with, we must carefully consider:

- What is the right model/framework for the knowledge?

- What is the right format for the data?

- How does it fit in with Linked Data in general?

- How can it be made semantic/meaningful by using objective links and subjective lenses?

- How is it going to be used, and does it need to be automatically converted to other formats or frameworks (and if so, does the conversion lose any data and/or semantics, and is that ok)?

It is very much a Knowledge Engineering task.
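As a rough illustration of Linked Data without RDF, the sketch below treats a plain CSV file as Linked Data: each row is identified by a URL, and any other cell that holds a URL is read as an outbound link. The column names, the example URLs and the helper functions are all hypothetical, and the "a cell that parses as an http(s) URL is a link" rule is just one simple assumption about how such a file might be interpreted.

```python
import csv
import io
from urllib.parse import urlparse

# Hypothetical CSV: the column names and URLs are made up for illustration.
CSV_TEXT = """id,name,homepage,knows
http://example.org/people/alice,Alice,http://alice.example.org/,http://example.org/people/bob
http://example.org/people/bob,Bob,,http://example.org/people/alice
"""


def is_link(value):
    """Treat a cell as a link if it parses as an absolute http(s) URL."""
    parts = urlparse(value)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def outbound_links(csv_text, id_column="id"):
    """Return (source, column name, target) for every link-valued cell."""
    links = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        source = row[id_column]
        for column, value in row.items():
            if column != id_column and is_link(value):
                links.append((source, column, value))
    return links


if __name__ == "__main__":
    for source, column, target in outbound_links(CSV_TEXT):
        print(f"{source} --{column}--> {target}")
```

The column header plays the role of the link's meaning here, which is about as simple as a model can get; whether that is enough depends on the answers to the questions listed above.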

Linked Data is as simple as:

- Please note that RDF/XML and Turtle are, presently, probably the most commonly used Semantic Web data formats.

- For instance, Keith Alexander wrote a wonderful post on how Linked Data fits in with everything else ( https://blogs.talis.com/platform-consulting/2010/11/11/a-picture-paints-a-dozen-triples/ ). Unfortunately the post seems to suggest that RDF is the only way that Linked Data can be modelled - which, as I’ve indicated in this blog post, is not the case. Keith’s blog post, however, shows only one way (of many) of making Linked Data - and he does hint at some good ideas.

I hope that this blog post makes some kind of sense, as I believe Linked Data is one of the ways that we can progress the web in a good direction.

Daniel

In the last couple of weeks I’ve been using a programming language (Python) that I’ve not used extensively in the past to work on a unit of a production website, increasing the simplicity of the unit and hopefully also increasing the efficiency (Occam’s Razor stylie?). I have been learning “as I go along”, and with a little help from a borrowed book[1]. It has been fun, and I’ve found myself liking Python a lot more than I was expecting (as I documented in my previous blog post about My Python Learning Curve).

Within the past week I’ve also picked up a small project (almost finished), which was essentially “Web Design”. Those of you who know me probably know that I’m not really a “designer” but a “developer”. So this has been a bit of a learning curve for me too: actual practical, production-level use of CSS (both 2.1 and 3 - focusing on getting it working for Chrome, Webkit, Firefox and IE >= 8), and also some graphical work using the GIMP.

Being freelance means that I’ve been able to focus on learning new things, and doing it quickly - it’s something that I love. I may, or may not, use the new Python and Design skills in the future - but hopefully it helps me to become a more well-rounded freelancer. Variety is also a spice, and so it has been nice to step away from the PHP/MySQL and Semantic Web stuff that I’ve been doing for quite a while.

The next step is progression. Next week is the beginning of a new tax-year here in the United Kingdom, and I hope that I will be able to progress using some variety (as indicated above), some reuse of my existing skill set, some hard work, some networking and a bit of luck!

Where am I going (i.e. where am I progressing to)? Who knows where the path may take us? But I will travel it nonetheless in good faith, taking decisions when needed.

I have figured out that at any one time I have:

- A favoured set of skills (skills that I already have, some of which might be rusty)

- A set of skills that I’ve recently been using or learning (skills that I have and are refreshed in my mind)

- A set of skills that I want to acquire

- A set of interests which I wish to apply my skills to

So I look for freelance work that will combine as many of the above as possible (so if you can help then please let me know!).

As I am becoming a Journeyman of the Worshipful Company of Information Technologists, I shall be “paired up” with a mentor (someone who has many more years in industry than I have - although I do have quite a few already, to be honest). This mentor will hopefully help to guide me on my career path, helping me to avoid the pitfalls that I have fallen into in the past and to make good choices. I always hope that I can get more involved with the British Computer Society, but I haven’t yet found their events particularly interesting (and when they do seem interesting, they are usually somewhere else at some strange time).

So, time to plan combining skills and interests for the new tax year then!

Footnotes:

- Learning Python by Mark Lutz