Note: This guide is part two of the previous blog post on Importing Linked Data into a Spreadsheet.

Introduction and Theory:

Say you don’t want your data in a Google Spreadsheet, but would prefer it in Excel, OpenOffice, LibreOffice or some other standalone desktop application on your computer. You can still work with dynamic Linked Data - via the powers of WebDAV (a technology for setting up an “online hard drive” over the protocols that power the World Wide Web).

A WebDAV URL is also a Data Source Name (aka an “address”). Because it is a URL it can be linked to, so it is still Linked Data, and yet it can also be treated as a store. This is one of the many powers of the Linked Data Web.

Once Method Part One is done there are two options for the rest of the tutorial: the first “Part Two” deals with the data in LibreOffice (and I presume that the process is very similar in contemporary versions of OpenOffice), the second “Part Two” deals with the data in Excel (I’ve used version 2010 on Windows 7).

Prerequisites:

- You will need a copy of Virtuoso on your machine up and running (the enterprise edition and the open source edition should both work). You must also have administrative access to it.

- A new-ish version of LibreOffice, OpenOffice or Microsoft Excel

- An operating system that can cope with WebDAV (which seems to be most of them these days - to varying degrees of success)

An Example Query:

This method is ideal for fast-paced data that changes often - such as statistics or the locations of crimes. However, for now I’ve just used a simple lat-long search of those “areas” that touch my local area of “Long Ashton and Wraxall”.

SELECT DISTINCT ?TouchesAreaURI ?TouchesName ?TouchesAreaLat ?TouchesAreaLong

WHERE {

<http://data.ordnancesurvey.co.uk/id/7000000000000770> <http://data.ordnancesurvey.co.uk/ontology/spatialrelations/touches> ?TouchesAreaURI .

GRAPH ?TouchesAreaURI {

?TouchesAreaURI <http://www.w3.org/2000/01/rdf-schema#label> ?TouchesName;

<http://www.w3.org/2003/01/geo/wgs84_pos#lat> ?TouchesAreaLat;

<http://www.w3.org/2003/01/geo/wgs84_pos#long> ?TouchesAreaLong

}

}
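If you would rather script the request than type the query into a form, here is a minimal Python sketch that turns the example query into a request URL. It assumes a Virtuoso instance at `localhost:8890` (a placeholder, use your own server) and relies on the `query` and `format` parameters that the /sparql form puts into its own URLs. The function name `build_sparql_url` is made up for illustration.

```python
from urllib.parse import urlencode

# The example query, written in standard SPARQL (no commas in SELECT).
# The vocabulary and Ordnance Survey identifiers are http:// IRIs.
QUERY = """
SELECT DISTINCT ?TouchesAreaURI ?TouchesName ?TouchesAreaLat ?TouchesAreaLong
WHERE {
  <http://data.ordnancesurvey.co.uk/id/7000000000000770>
      <http://data.ordnancesurvey.co.uk/ontology/spatialrelations/touches> ?TouchesAreaURI .
  GRAPH ?TouchesAreaURI {
    ?TouchesAreaURI <http://www.w3.org/2000/01/rdf-schema#label> ?TouchesName ;
        <http://www.w3.org/2003/01/geo/wgs84_pos#lat> ?TouchesAreaLat ;
        <http://www.w3.org/2003/01/geo/wgs84_pos#long> ?TouchesAreaLong
  }
}
"""


def build_sparql_url(endpoint: str, query: str, result_format: str = "text/html") -> str:
    """Return a GET URL that asks the endpoint to run `query`."""
    params = {"query": query.strip(), "format": result_format}
    # urlencode percent-encodes the query text so it is safe inside a URL.
    return endpoint + "?" + urlencode(params)


if __name__ == "__main__":
    # Assumed placeholder host and port.
    print(build_sparql_url("http://localhost:8890/sparql", QUERY))
```

Pasting the printed URL into a browser should give the same result page as submitting the form by hand.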

Method Part One: Generic

As mentioned in the prerequisites - you will need administrative access to Virtuoso in order to fully run through this tutorial. This is because we need to create folders which need to be attached to the SPARQL user in order for the /sparql endpoint to save to WebDAV.

- Administrative Setup:

  - Log in to Conductor

  - Go to System Admin > User Accounts

  - Click “Edit” next to the SPARQL user

  - Change the following:

    - DAV Home Path: /DAV/home/QL/ (you could call the “QL” folder whatever you like - just remember what you’ve changed it to)

    - DAV Home Path “create”: Checked

    - Default Permissions: all checked

    - User Type: SQL/ODBC and WebDAV

  - Click Save.

  - Go to Web Application Server > Content Management > Repository

  - Navigate the WebDAV to: DAV/home/QL (or whatever you named “QL”)

  - Click the New Folder Icon (it looks like a folder with an orange splodge on the top-left)

  - Make a new folder:

    - Name: saved-sparql-results (must not be different!)

    - Permissions: all checked

    - Folder Type: Dynamic Resources

  - Click Create

- Query and Data Setup:

  - Hit http://<server>:<port-usually-8890>/sparql

  - Enter the SPARQL query (e.g. the Example Query above)

  - Change the following:

    - Change to a “grab everything” type of query - i.e. “Try to download all referenced resources (this may be very slow and inefficient)”. Or pick one of the other options, depending on the locations, the data and the query.

    - Format Results as: Spreadsheet (or CSV)

    - “Display the result and do not save”: change this to “Save the result to the DAV and refresh it periodically with the specified name:”

    - Add a filename (with file extension). For example testspreadsheet.xls (or testspreadsheet.csv)

  - Click “Run Query”

  - You’ll now see a “Done” screen, with the URI of the result; this is a WebDAV accessible URL. Please take note of the URL of the “saved-sparql-results” folder, it should look a little like this: http://<server>:<port-usually-8890>/DAV/home/QL/saved-sparql-results
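The shape of the saved result’s address follows from the folder setup above, and a small Python sketch can make that explicit. It assumes plain HTTP and the /DAV/home/<folder>/saved-sparql-results layout from this tutorial; the helper name `saved_result_url` and the `localhost` host are placeholders of mine, not part of Virtuoso.

```python
from urllib.parse import quote


def saved_result_url(server: str, port: int, home_folder: str, filename: str) -> str:
    """Build the WebDAV URL of a result saved by the /sparql endpoint."""
    # Layout assumed from the setup steps: /DAV/home/<home_folder>/saved-sparql-results/
    path = "/DAV/home/{}/saved-sparql-results/{}".format(
        quote(home_folder), quote(filename)  # percent-encode spaces and the like
    )
    return "http://{}:{}{}".format(server, port, path)


if __name__ == "__main__":
    # Assumed placeholder server; "QL" and the filename match the examples above.
    print(saved_result_url("localhost", 8890, "QL", "testspreadsheet.xls"))
```

Dropping the filename from the end gives the folder URL that the spreadsheet applications ask for later on.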

Method Part Two A: For LibreOffice and OpenOffice users

You will need to do the following in order to connect to a WebDAV folder:

- Go to Tools > Options > LibreOffice/OpenOffice > General and ensure that “Use LibreOffice/OpenOffice dialogue boxes” is turned on.

You will be linking dynamically from your spreadsheet to the resource on your WebDAV instance:

- Start a new spreadsheet, or load up a spreadsheet where you want the resource to go.

- Go to Insert > Link to External Data

- Click the “…” button

- Enter your “saved-sparql-results” URL (not including the filename itself!), and press enter

- You should now see your “saved-sparql-results” WebDAV directory. Select the file, and click Insert. The program will then probably ask you for your DAV login details (you may want to make the program remember them). It may also ask you about the format of the file - just follow that through as you normally would when importing/opening a file. You may also have to select “HTML_all” if you chose the “Spreadsheet” option in the SPARQL interface.

- Check the “Update every” box, and change the time to a suitable time based on the data.

- Finally, press the “OK” button… and you’ll see your lovely Linked Data inside your spreadsheet. Then you’ll be able to do whatever you want to your data (e.g. create a graph, do some calculations etc etc) - and everything will update when the data is updated. Funky!

Method Part Two B: For Excel users

OK, so I’m not a native Windows user (I used Mac OS and Amiga OS in my childhood, before moving to Unix and Linux based operating systems in about 2001). What I have found is that Windows 7 and Excel go a little strange with WebDAV, they like certain configurations - so I’ll be showing you a reasonably bodgy way of doing this :-P

- Prerequisite: in the “Query and Data Setup” part of Method Part One, where you choose “Format Results as”, save the results as HTML, and make sure the file extension is also .html

- Open Excel (I’m using Excel 2010)

- Click on the Data menu

- Click “From Web”

- In the address bar enter your “saved-sparql-results” URL and press enter - this will probably ask you to enter your DAV username and password

- Click on your <filename>.html file - you should then see the HTML Table

- Press the Import button

- A dialogue will pop up asking about where you would like to place your data - for ease I use the default.

- You’ll see the data! The important thing to note is that this is Linked Data - however, it is not quite self-updating yet. In order to do that we need to set the connection properties… so…

- Select the imported data

- Click “properties” which is in the “Connections” subpanel of the “Data” menu

- Change the “Refresh Every”, and/or check the “Refresh data when opening the file”. Click ok.

- Self-updating Excel spreadsheets from Linked Data. Funky!

Documentation Resources

- Importing Linked Data into a Spreadsheet Part 1 (Google Spreadsheets)

- LibreOffice: “Opening a Document Using WebDAV over HTTPS”

- OpenLink Software Documentation of the SPARQL implementation in Virtuoso and its endpoint

Software Resources

- OpenLink Virtuoso

- Virtuoso Universal Server - Proprietary Edition

- Virtuoso Open Source Edition

- LibreOffice

- OpenOffice

- Microsoft Office

I hope that all works for you, and feel free to share any ideas or findings!

I’ve recently come across the usefulness of the ImportXML() function (and the related ImportHTML() and ImportFeed() functions) found in the Google Docs Spreadsheet app [1]; their usefulness comes from Uniform Resource Locators (URLs). This was partly thanks to Kingsley Idehen’s example Google Spreadsheet on the “Patriots Players” (and his tweet dated 22nd June at 10:11am), and so I wanted to see how it was done and maybe make something that wasn’t about American Football.

URLs are the actual addresses for Data, they are the “Data Source/Location Names” (DSNs). With the ImportHtml() (et al) functions you just have to plug in a URL and your data is displayed in the spreadsheet. Of course this is dependent on the structure of the data at that address, but you get the picture.

Let me show you an example based on a SPARQL query I created many moons ago:

SELECT DISTINCT ?NewspaperURI ?Newspaper ?Stance WHERE {

?NewspaperURI rdf:type dbpedia-owl:Newspaper ;

rdfs:label ?Newspaper ;

dcterms:subject <http://dbpedia.org/resource/Category:Newspapers_published_in_the_United_Kingdom>;

<http://dbpedia.org/property/political> ?Stance .

FILTER (lang(?Stance) = "en") .

FILTER (lang(?Newspaper) = "en")

}

ORDER BY ?Stance

Briefly, the above shows a newspaper URI, a newspaper name and the newspaper’s political stance - limited to just those newspapers published in the United Kingdom. The result is a little messy as not all of the newspapers have the same style of label, and some of the newspapers’ stances will be hidden behind a further set of URIs - but this is just an example.

Now we can plug this in to a sparql endpoint such as:

- https://dbpedia.org/sparql or

- https://lod.openlinksw.com/sparql

We shall use the second for now - if you hit that in your browser then you’ll be able to plug the query into a nicely made HTML form and fire that off to generate an HTML webpage. [2]

However, that isn’t entirely useful as we want to get it into a Google Spreadsheet! So, we need to modify the URL that the form creates. Firstly, copy the URL as it is… for instance…

https://lod.openlinksw.com/sparql?default-graph-uri=&should-sponge=&query=SELECT+DISTINCT+%3FNewspaperURI+%3FNewspaper+%3FStance+WHERE+{%0D%0A%3FNewspaperURI+rdf%3Atype+dbpedia-owl%3ANewspaper+%3B%0D%0A++rdfs%3Alabel+%3FNewspaper+%3B%0D%0A++dcterms%3Asubject+%3Chttp%3A%2F%2Fdbpedia.org%2Fresource%2FCategory%3ANewspapers_published_in_the_United_Kingdom%3E%3B%0D%0A+%3Chttp%3A%2F%2Fdbpedia.org%2Fproperty%2Fpolitical%3E+%3FStance+.+%0D%0AFILTER+%28lang%28%3FStance%29+%3D+%22en%22%29+.+%0D%0AFILTER+%28lang%28%3FNewspaper%29+%3D+%22en%22%29%0D%0A}%0D%0AORDER+BY+%3FStance&debug=on&timeout=&format=text%2Fhtml&CXML_redir_for_subjs=121&CXML_redir_for_hrefs=&save=display&fname=

Then where it says “text%2Fhtml” (which means that it is of text/html type) change it to “application%2Fvnd.ms-excel” (which means that it is of application/vnd.ms-excel type - in other words a spreadsheet-friendly table). In our example this gives the URL used in the ImportHtml() function further down.
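If you find hand-editing a long URL error-prone, the same swap can be sketched in a few lines of Python. This is only an illustration: `with_result_format` is a made-up helper name, and the URL in the example is a deliberately shortened stand-in for the real one.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_result_format(url: str, mime_type: str) -> str:
    """Return `url` with its `format` query parameter set to `mime_type`."""
    parts = urlsplit(url)
    # keep_blank_values preserves empty parameters such as "fname=".
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    pairs = [(key, mime_type if key == "format" else value) for key, value in pairs]
    return urlunsplit(parts._replace(query=urlencode(pairs)))


if __name__ == "__main__":
    # Shortened placeholder URL, not the full newspaper query.
    html_url = (
        "https://lod.openlinksw.com/sparql"
        "?query=SELECT+%3Fs+WHERE+%7B%3Fs+%3Fp+%3Fo%7D+LIMIT+5"
        "&format=text%2Fhtml&save=display&fname="
    )
    print(with_result_format(html_url, "application/vnd.ms-excel"))
```

Note that re-encoding the query string may percent-encode a few characters (such as curly braces) that the original form left alone; the server should treat both spellings the same.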

We then need to plug this into our Google Spreadsheet - so open a new spreadsheet, or create a new sheet, or go to where you want to place the data on that sheet.

Then click on one of the cells, and enter the following function:

=ImportHtml("https://lod.openlinksw.com/sparql?default-graph-uri=&should-sponge=&query=SELECT+DISTINCT+%3FNewspaperURI+%3FNewspaper+%3FStance+WHERE+{%0D%0A%3FNewspaperURI+rdf%3Atype+dbpedia-owl%3ANewspaper+%3B%0D%0A++rdfs%3Alabel+%3FNewspaper+%3B%0D%0A++dcterms%3Asubject+%3Chttp%3A%2F%2Fdbpedia.org%2Fresource%2FCategory%3ANewspapers_published_in_the_United_Kingdom%3E%3B%0D%0A+%3Chttp%3A%2F%2Fdbpedia.org%2Fproperty%2Fpolitical%3E+%3FStance+.+%0D%0AFILTER+%28lang%28%3FStance%29+%3D+%22en%22%29+.+%0D%0AFILTER+%28lang%28%3FNewspaper%29+%3D+%22en%22%29%0D%0A}%0D%0AORDER+BY+%3FStance&debug=on&timeout=&format=application%2Fvnd.ms-excel&CXML_redir_for_subjs=121&CXML_redir_for_hrefs=&save=display&fname=", "table", 1)

The first parameter is our query URL, the second is for pulling out the table elements, and the third is to grab the first of those table elements.
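To get a feel for what those three parameters mean, here is a rough Python sketch of the same idea: take some HTML, find the table elements and return the rows of the n-th one. It is not how Google implements ImportHtml(), it ignores nested tables, and the sample HTML is a made-up placeholder rather than real endpoint output.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the cell text of every <table> in an HTML document."""

    def __init__(self):
        super().__init__()
        self.tables = []   # each table is a list of rows
        self._row = None   # cells of the row being read, if any
        self._cell = None  # text fragments of the cell being read, if any

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def import_html_table(html: str, index: int = 1):
    """Return the rows of the index-th table (counting from 1, like ImportHtml)."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.tables[index - 1]


# Made-up placeholder page standing in for the endpoint's HTML output.
SAMPLE_HTML = (
    "<table><tr><th>Newspaper</th><th>Stance</th></tr>"
    "<tr><td>Paper A</td><td>Stance X</td></tr></table>"
)

if __name__ == "__main__":
    for row in import_html_table(SAMPLE_HTML, 1):
        print(row)
```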

The spreadsheet will populate with all the data that we asked for. Pretty neat!

Now that the data is in a Google Spreadsheet you can do all kinds of things that spreadsheets are good at. One example based on our query might be statistics on political stance; or, if you modify the query a bit to pull out more than just the UK newspapers, statistics on how those stances compare with the UK’s and how they relate to the political parties currently in power.
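The “statistics on political stance” idea boils down to counting rows per stance, which a spreadsheet would do with a pivot table or a count formula. As a hedged illustration, the same count in Python might look like this; the rows are invented placeholders, not real query results, and `stance_counts` is a name I made up.

```python
from collections import Counter


def stance_counts(rows):
    """Count how many newspapers fall under each political stance.

    `rows` is a list of (newspaper_uri, newspaper_name, stance) tuples,
    i.e. the three columns that the example query returns.
    """
    return Counter(stance for _uri, _name, stance in rows)


if __name__ == "__main__":
    # Made-up placeholder rows for demonstration only.
    sample_rows = [
        ("http://example.org/paper-a", "Paper A", "Stance X"),
        ("http://example.org/paper-b", "Paper B", "Stance Y"),
        ("http://example.org/paper-c", "Paper C", "Stance X"),
    ]
    for stance, count in stance_counts(sample_rows).most_common():
        print(stance, count)
```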

It also doesn’t have to specifically be data found in DBpedia, it could be anything (business data, science data, personal data etc.). The key to all of this is the power of URLs, and how they allow the dynamic linking to hyperdata! This is the killer-power of Linked Data.

If you do the above tutorial then let us know if you find anything of interest, and share any experiences you may have.

Resources

- Google Documentation of using ImportXml, ImportHtml and ImportFeed functions in Google Spreadsheets: https://docs.google.com/support/bin/answer.py?answer=75507

- OpenLink Software Documentation of the SPARQL implementation in Virtuoso and its endpoint: https://docs.openlinksw.com/virtuoso/rdfsparql.html

- Kingsley has been adding to demos on his bookmarks page: https://www.delicious.com/kidehen/linked_data_spreadsheet_demo

Tim Berners-Lee conceptualised the web when he was at CERN. He built a directory of the people, groups and projects - this was the ENQUIRE project. This eventually morphed into the World Wide Web, or a “Web of Documents”. Documents are indeed “objects”, but often not structurally defined. A great book about this is “Weaving the Web” by Berners-Lee.

The boom in this way of thinking came when businesses started to use XML to define “objects” within their processes. Being able to define something and pass it between one system and another was incredibly useful. For instance, I was in a development team quite a while back where we were communicating with BT using XML to describe land-line telephone accounts.

At the same time, people started developing and using a new flavour of the “web” which was a lot more sociable. We saw the rise of “profiles”, “instant messaging”, “blogging” and “microblogging/broadcasting”. All of which are very easy to understand in terms of “objects”: Person X is described as (alpha, beta, omega) and owns a Blog B which is made up of Posts [p] and a Twitter account T which is made up of Updates [u].

The first reaction to the use of objects for web communications was to provide developers with object descriptions using XML via “Application Programming Interfaces” (APIs) over the HyperText Transfer Protocol (HTTP). This was the birth of “Web Services”. Using web services a business could “talk” over the web, and social networks could “grab” data.

Unfortunately this wasn’t enough, as XML does not provide a standardised way of describing things, and also does not provide a standardised way of describing things in a distributed fashion. Distributed description is incredibly useful, it allows things to stay up to date - and not only that - it is philosophically/ethically more sound as people/groups/businesses “keep” their own data objects. The Semantic Web provided the Resource Description Framework (RDF) to deal with this, and the Linked Data initiative extends this effort to its true potential by providing analogues in well-used situations, processes and tools. (For instance, see my previous blog post on how Linked Data is both format and model agnostic).

This is all good. However, we live in an age where Collaboration is essential. With Collaboration comes the ability to edit, and with the ability to edit comes the need to have systems in place that can handle the areas of “identification”, “authentication”, “authorisation” and “trust”. We are starting to see this in systems such as OpenID, OAuth and WebID - particularly when coupled with FOAF [1].

These systems will be essential for getting distributed collaboration (via Linked Data) right, and distributed collaboration should be dealt with by the major Semantic Web / Linked Data software providers - and should be what web developers are thinking about next. Distributed Collaboration is going to be incredibly useful for both business-based and social-based web applications.

Please do feel free to comment if you have any relevant comments, or if you have any links to share relevant to the topic.

Many thanks,

Daniel

Footnotes

- There is some nice information about WebID at the W3C site. Also see FOAF+SSL on the Openlink website, and the WebID protocol page on the OpenLink website.

I decided to give an overview of the best books that you can buy to make yourself well-rounded in terms of Linked Data, the Semantic Web and the Web of Data.

Knowledge Working

Let us first start off with what I like to call “Knowledge Working”, this is essentially the realm of technical knowledge-management and knowledge acquisition/modelling. There are three books that I would promote in this respect.

The first is Information Systems Development: Methodologies, Techniques and Tools written by Avison & Fitzgerald. This book, although it gets down and dirty with some technical detail, can be incredibly useful for an overview of information systems and knowledge design in general.

The second is Knowledge Engineering and Management: The CommonKADS Methodology (A Bradford book) by Guus Schreiber et al (aka the CommonKADS team). I was taught from this book on one of my modules during my undergraduate degree. It is less “programmer-based” than the Avison & Fitzgerald book, but has very useful information about acquisition, modelling and analysis of knowledge. I highly recommend this, particularly as it is incredibly useful when coupled with Semantic Web technology.

The third book is a new book that we’re waiting to have the second edition of (at the time of writing it was estimated to be available 13th July 2011). This book is Semantic Web for the Working Ontologist: Effective Modeling in RDFS and OWL by Allemang & Hendler (a first edition is also available). Allemang and Hendler are heavyweights in the Semantic Web world, with Allemang being chief scientist at TopQuadrant and Hendler being one of the writers of the earliest and most well known articles on “The Semantic Web” (Scientific American: 2001 with Berners-Lee and Lassila). This book is quite technical in places, but it does focus on ontologies and metadata (and metametadata?).

Web Science

Web Science is another important area of interest, particularly in the earlier stages of Linked Data and Semantic Web development and actually applying the theory into practice.

The earliest official book by the Web Science Trust team was A Framework for Web Science (Foundations and Trends in Web Science) by Berners-Lee et al. This is quite an expensive book, and quite academic in style, but useful nonetheless.

You may want to look at something a little cheaper, something a little more practical too. This is where The Web’s Awake: An Introduction to the Field of Web Science and the Concept of Web Life by Tetlow comes in. This interesting book takes a common sense approach to Web Science, and I would certainly recommend it.

Tools and Techniques: The Evolution from the Semantic Web to Linked Data

There are the classics such as Practical RDF by Powers and A Semantic Web Primer by Antoniou and van Harmelen. There are introductory books such as Semantic Web For Dummies by Pollock. These are all good foundational books which are recommended, but they often don’t get to the essence of the Semantic Web and especially not Linked Data.

For the essential Semantic Web and Linked Data we may want to look at Programming the Semantic Web by Segaran et al (Segaran also authored the wonderful Programming Collective Intelligence: Building Smart Web 2.0 Applications) and Semantic Web Programming by Hebeler (of BBN Technologies) et al. Plus there are the quite useful, although very specific, books Linking Enterprise Data and Linked Data: Evolving the Web into a Global Data Space (Synthesis Lectures on the Semantic Web, Theory and Technology), which is also available free at “Linked Data by Heath and Bizer” ( https://linkeddatabook.com/ ).

Other topics of interest

There are of course other areas of interest which are very relevant to true Linked Data and Semantic Web, these include:

- Semantic Networks and Frames - from the fields of logic and artificial intelligence. This also inspired Object-Oriented theory.

- Pointers and References - yep the ones from programming (such as in C++).

- HyperText Transfer Protocol

- Graph Theory

I hope this list of interesting and useful books is handy. Please do comment if you have any other books that you wish to share with us.

Thank you,

Daniel

Many moons ago I wrote a blog post detailing how RDF (one of the semantic web modelling frameworks) is format agnostic, in other words you can write RDF in various languages. These languages, or formats, may include XML (as “RDF/XML”), Turtle (as “RDF/TTL”), Notation 3 (as “RDF/N3”), RDFa (as “XHTML-RDFa”) and N-Triples (as “RDF/NT”) [1].

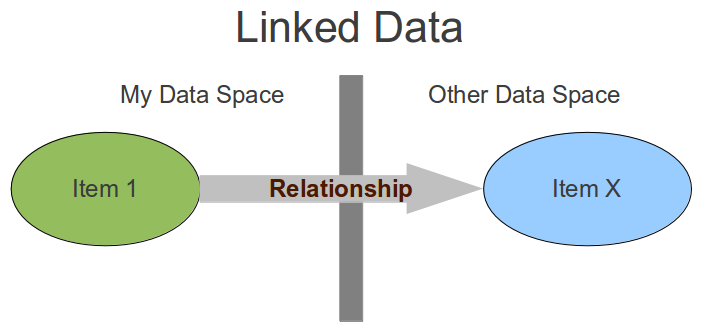
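To make “format agnostic” a bit more concrete, here is a small Python sketch that writes one and the same statement in two of those formats, N-Triples and Turtle. It is a toy: the subject is an example.org placeholder, the helper names are made up, and it assumes the literal contains no quotes or backslashes that would need escaping.

```python
RDFS = "http://www.w3.org/2000/01/rdf-schema#"


def to_ntriples(subject: str, predicate: str, literal: str, lang: str) -> str:
    """Write one triple with a language-tagged literal as N-Triples."""
    return '<{}> <{}> "{}"@{} .'.format(subject, predicate, literal, lang)


def to_turtle(subject: str, predicate: str, literal: str, lang: str) -> str:
    """Write the same triple as Turtle, abbreviating rdfs: predicates."""
    if predicate.startswith(RDFS):
        pred = "rdfs:" + predicate[len(RDFS):]
    else:
        pred = "<{}>".format(predicate)
    prefix_line = "@prefix rdfs: <{}> .".format(RDFS)
    triple_line = '<{}> {} "{}"@{} .'.format(subject, pred, literal, lang)
    return prefix_line + "\n\n" + triple_line


if __name__ == "__main__":
    # Placeholder statement: "Paper A" is a label for an example.org resource.
    args = ("http://example.org/paper-a", RDFS + "label", "Paper A", "en")
    print(to_ntriples(*args))
    print()
    print(to_turtle(*args))
```

The two outputs look different on the page, but they describe exactly the same triple.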

“Linked Data” (the modern Data Web concept) partly started life as an extension to the Semantic Web, basically increasing meaning to data in one space using outbound (and/or inbound) links to data in another space. But the important thing to grasp is that Linked Data is not format dependent, nor is it model dependent as some may believe! [2]

RDF does not have to be used to achieve “Linked Data”. RDF can obviously help in some cases, but in some cases when the data is either very simple or very domain-centric we don’t want to spend too much time trying to make our data fit into (or out of!) the RDF model. Linked Data could very easily be in some other format, such as CSV, JSON, RSS, Atom or even the Microsoft Collection XML format. Whatever data we are dealing with, we must carefully consider:

- What is the right model/framework for the knowledge?

- What is the right format for the data?

- How does it fit in with Linked Data in general?

- How to make it semantic/meaningful by using objective links and subjective lenses?

- How it is going to be used, and whether it needs to be automatically converted to other formats or frameworks (and by doing so, does it (and is it ok to) lose any data and/or semantics)?

It is very much a Knowledge Engineering task.
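As a rough illustration of Linked Data without RDF, the Python sketch below takes one record whose values include URLs pointing at other resources and writes it out as both JSON and CSV. Everything in it is hypothetical: the example.org URLs, the field names and the helper functions are placeholders I have chosen, not a standard.

```python
import csv
import io
import json

# Placeholder record: the URLs are example.org stand-ins, not real data.
AREA = {
    "id": "http://example.org/area/1",
    "name": "Area One",
    "touches": "http://example.org/area/2",  # outbound link to another resource
}


def as_json(record: dict) -> str:
    """Serialise the record as a JSON object."""
    return json.dumps(record, indent=2)


def as_csv(record: dict) -> str:
    """Serialise the record as a header line plus one CSV row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(record))
    writer.writeheader()
    writer.writerow(record)
    return buffer.getvalue()


if __name__ == "__main__":
    print(as_json(AREA))
    print(as_csv(AREA))
```

In both outputs the “touches” value is still a URL that a person or program can follow, which is the part that makes the data linked, whatever the format.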

Linked Data is as simple as:

- Please note that RDF/XML and Turtle are, presently, probably the most commonly used Semantic Web data formats.

- For instance Keith Alexander posted a wonderful post on how Linked Data fits in with everything else ( https://blogs.talis.com/platform-consulting/2010/11/11/a-picture-paints-a-dozen-triples/ ). Unfortunately the post seems to suggest that RDF is the only way that Linked Data can be modelled - which, as I’ve indicated in this blog post, is not the case. Keith’s blog post, however, shows only one way (of many) of making Linked Data - and he does hint at some good ideas.

I hope that this blog post makes some kind of sense, as I believe Linked Data is one of the ways that we can progress the web in a good direction.

Daniel